The next-generation robot, R3, will address most of the limitations of the current R2. It is a complete redesign from scratch, on the software as well as the hardware side. It will use a heat-based weeding concept that, as far as I know, has not been explored before. R3 will also move beyond pure concept robots like R1 and R2 to a full prototype with all the essential features for practical use, such as fully autonomous navigation and remote control, and it will support different crops. R3 is going to compete at Jugend forscht 2026.

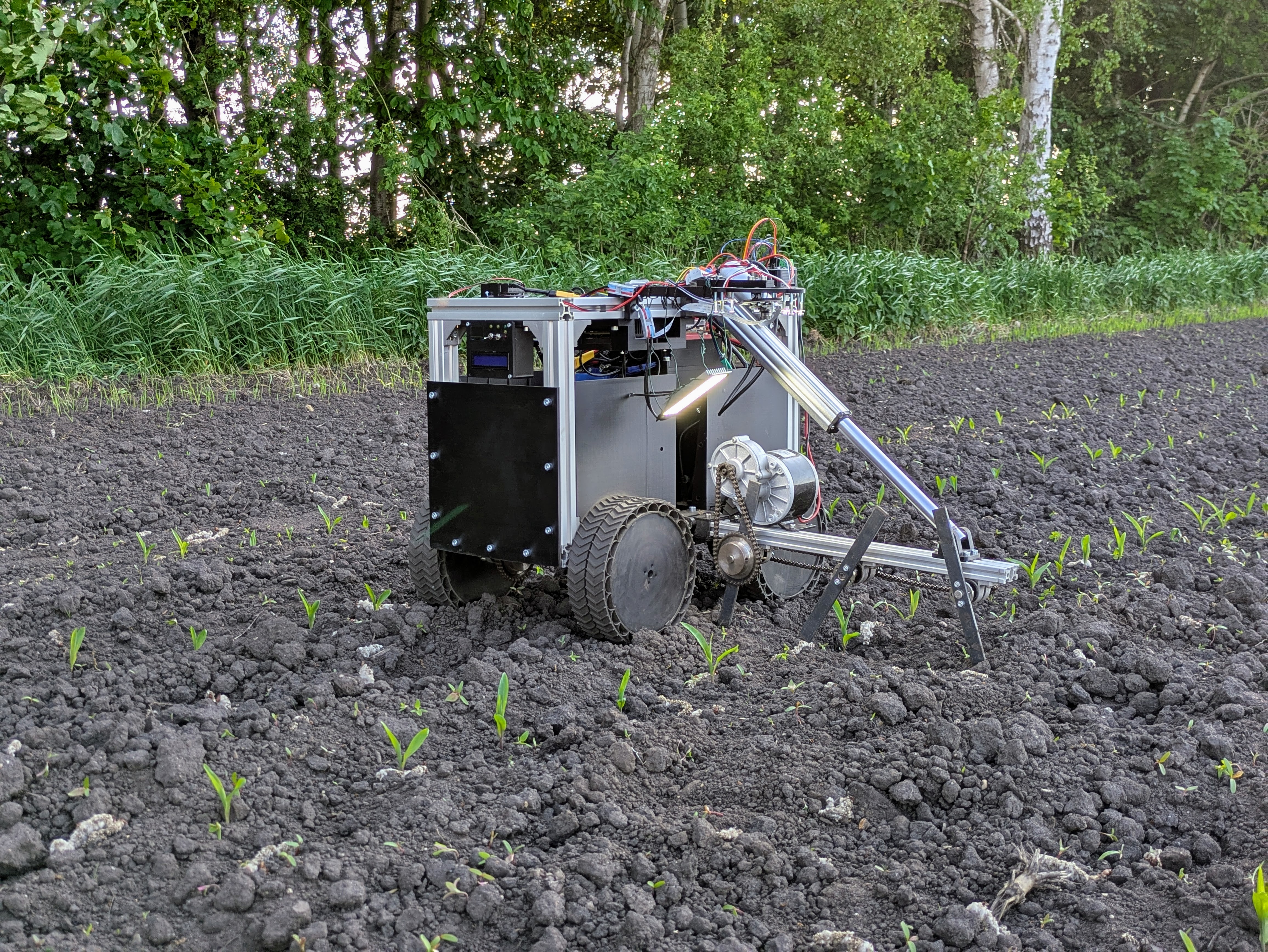

R2 is a compact field robot that uses AI to detect maize plants and mechanically removes unwanted companion vegetation between them. The main goal of R2 was to demonstrate that such a robot can be developed cost-effectively. This was achieved: the robot costs under 2000 € to build, before any economies of scale. The platform prioritizes a practical, modular design, combining on‑board object detection with dedicated microcontroller‑based subsystems for actuation and control. R2 weighs about 18 kg and measures roughly 37 × 40 × 50 cm (W × H × D).

The system is split into subsystems: object detection (main computer), weed removal, drive and power. A central microcontroller aggregates telemetry and mediates inter‑subsystem communication.

The object detection model is based on YOLO11 and was trained on a custom dataset of over 3000 labeled maize images. It runs on an NVIDIA Jetson Orin Nano at around 25 frames per second and achieved about 99.4% accuracy in tests. Detections are aggregated across frames and compensated for the robot's relative motion to reduce single-frame errors.
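
One way to combine multi-frame aggregation with motion compensation is a small tracker. This is a minimal sketch, not R2's actual code. It assumes that detections have already been projected to ground-plane coordinates (metres, relative to the robot), that encoder odometry provides the forward distance driven between frames, and that the robot drives straight, since steering is not implemented. The class `DetectionAggregator`, the greedy nearest-neighbour matching and all thresholds are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    x: float         # forward distance from the robot (m)
    y: float         # lateral offset (m)
    hits: int = 1    # frames in which the plant was detected
    misses: int = 0  # consecutive frames without a matching detection


class DetectionAggregator:
    """Hypothetical multi-frame filter; all thresholds are illustrative."""

    def __init__(self, match_radius=0.05, min_hits=3, max_misses=5, alpha=0.5):
        self.match_radius = match_radius  # max distance to match a detection (m)
        self.min_hits = min_hits          # frames needed before a plant is reported
        self.max_misses = max_misses      # frames a track may go unseen
        self.alpha = alpha                # weight of a new measurement when smoothing
        self.tracks: list[Track] = []

    def update(self, forward_motion, detections):
        """forward_motion: metres driven since the last frame (from encoders).
        detections: list of (x, y) plant positions on the ground plane (m)."""
        # 1. Motion compensation: the robot drives straight, so every
        #    stationary plant moves backwards by the distance driven.
        for t in self.tracks:
            t.x -= forward_motion

        # 2. Greedy nearest-neighbour association of detections to tracks.
        unmatched = list(detections)
        for t in self.tracks:
            best, best_dist = None, self.match_radius
            for d in unmatched:
                dist = math.hypot(d[0] - t.x, d[1] - t.y)
                if dist <= best_dist:
                    best, best_dist = d, dist
            if best is None:
                t.misses += 1
                continue
            unmatched.remove(best)
            # Smooth the predicted position towards the new measurement.
            t.x += self.alpha * (best[0] - t.x)
            t.y += self.alpha * (best[1] - t.y)
            t.hits += 1
            t.misses = 0

        # 3. Unmatched detections start new, unconfirmed tracks.
        self.tracks.extend(Track(x, y) for x, y in unmatched)

        # 4. Drop tracks that have not been seen for too long.
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]

        # Only plants confirmed in several frames are reported.
        return [(t.x, t.y) for t in self.tracks if t.hits >= self.min_hits]
```

Requiring several hits suppresses one-off false positives, while the motion update lets a plant that is briefly missed in a frame keep its track instead of being counted again.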

Weeds are removed by three vertically rotating flail blades running at about 100 RPM, driven via chains by a 250 W brushed motor. The blades are mounted on an aluminium extrusion that a linear actuator can lift to clear maize plants; the blades are stopped while the actuator is raised. The subsystem's microcontroller controls both the actuator and the motor through BTS7960 H‑bridge drivers.
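
The rule that the blades only spin while lowered amounts to a small interlock. The following is a minimal sketch of one control cycle, not R2's actual microcontroller firmware. The names `weeder_step` and `WeederCommand`, the command encoding, the guard distances, and the assumption that the actuator reports when it is fully lowered (for example via a limit switch or a timed travel) are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical command encoding for the H-bridge driving the linear actuator.
UP, HOLD, DOWN = 1, 0, -1


@dataclass
class WeederCommand:
    blade_motor_on: bool
    actuator: int  # UP, HOLD or DOWN


def weeder_step(plant_distances, actuator_lowered,
                guard_ahead=0.10, guard_behind=0.05):
    """One control cycle of the blade interlock.

    plant_distances: forward distances (m) of confirmed maize plants from the
        blade axis; negative values mean the plant has already been passed.
    actuator_lowered: True once the actuator reports its lower end position.
    guard_ahead / guard_behind: protected zone around each plant (m).
    """
    plant_in_zone = any(-guard_behind <= d <= guard_ahead for d in plant_distances)

    if plant_in_zone:
        # Stop the blades and lift them over the plant. The actuator is
        # assumed to stop by itself at its upper end position.
        return WeederCommand(blade_motor_on=False, actuator=UP)
    if not actuator_lowered:
        # Plant has passed: lower again, blades stay off until fully down.
        return WeederCommand(blade_motor_on=False, actuator=DOWN)
    # Blades down and no plant nearby: weed.
    return WeederCommand(blade_motor_on=True, actuator=HOLD)
```

Checking the plant zone before the lowered state means a plant that appears while the blades are still descending sends them straight back up, and the motor is never switched on while the blades are raised or moving.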

R2 is driven by two 120 W brushed motors (front and rear). Motor speed is regulated by PID loops, with magnetic encoders providing speed feedback. Steering is not yet implemented. The 3D‑printed wheels (20 cm diameter) combine a rigid PLA core with a softer TPU tread to improve traction and damping.
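
A discrete PID speed loop with encoder feedback can be sketched as follows. This is an illustration under stated assumptions, not R2's firmware: the gains, the output range (a PWM duty cycle from -1 to 1 for an H-bridge), the simple conditional-integration anti-windup and the toy first-order motor model at the end are all hypothetical; only the 20 cm wheel diameter comes from the text.

```python
import math


def wheel_speed(delta_ticks, ticks_per_rev, dt, wheel_diameter=0.20):
    """Ground speed in m/s from encoder ticks counted over dt seconds.

    Assumes ticks_per_rev already includes any gear ratio between the
    encoder and the wheel; 0.20 m is R2's wheel diameter.
    """
    revolutions = delta_ticks / ticks_per_rev
    return revolutions * math.pi * wheel_diameter / dt


class SpeedPID:
    """Discrete PID speed controller with a clamped output
    (e.g. PWM duty cycle: -1.0 = full reverse, 1.0 = full forward)."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        # Skip the derivative on the first sample to avoid a spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error

        candidate = self.integral + error * dt
        out = self.kp * error + self.ki * candidate + self.kd * derivative
        if self.out_min < out < self.out_max:
            # Only integrate while unsaturated (simple anti-windup).
            self.integral = candidate
        return min(self.out_max, max(self.out_min, out))


# Toy first-order motor model (hypothetical numbers) to exercise the loop:
# full duty would eventually reach v_max, with time constant tau.
pid = SpeedPID(kp=2.0, ki=5.0, kd=0.0)
v, dt, tau, v_max = 0.0, 0.01, 0.2, 1.5
for _ in range(500):  # 5 s at 100 Hz
    duty = pid.update(setpoint=0.5, measured=v, dt=dt)
    v += (v_max * duty - v) * dt / tau
```

The integral term removes the steady-state error a purely proportional controller would leave under load, and freezing it while the output is saturated keeps it from winding up during hard accelerations.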

Power is supplied by four prismatic LiFePO4 cells in series (3.2 V, 105 Ah each), forming a 12.8 V pack (~1.344 kWh). The pack is protected by a BMS with integrated fusing, and an additional PV fuse is placed in series. The robot draws up to ~35 A in operation, and its estimated runtime is roughly 6 hours; the electrical design uses a safety factor of 2.
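
The pack figures can be checked with a short worked calculation. It assumes the full nominal capacity is usable; in practice the BMS cutoff and depth-of-discharge limits reduce it somewhat. At a continuous 35 A the pack would last about 3 h, so the 6 h estimate corresponds to an average draw of about 17.5 A, roughly half the peak current.

```math
\begin{aligned}
U_\text{pack} &= 4 \times 3.2\,\text{V} = 12.8\,\text{V} \\
E_\text{pack} &= 12.8\,\text{V} \times 105\,\text{Ah} = 1344\,\text{Wh} \approx 1.344\,\text{kWh} \\
t_\text{peak} &= \frac{105\,\text{Ah}}{35\,\text{A}} = 3\,\text{h} \\
\bar{I}_{6\,\text{h}} &= \frac{105\,\text{Ah}}{6\,\text{h}} = 17.5\,\text{A}
\end{aligned}
```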

At Jugend forscht 2025, R2 won at the regional and state levels and competed in the national final. At the final, as in the earlier rounds, it also won the "Umwelttechnik" (environmental technology) prize.

R1 was an early proof-of-concept prototype used to validate the basic approach, the control logic and the object detection models. It demonstrated that developing such a robot privately is feasible. Lessons learned from R1 shaped the architecture used in R2.